Data-Driven Science and Engineering. Chapter 1 Exercises

Pythonを使ってData-Driven Science and Engineering 第2版 (2022) 第1章の演習問題を解いてみた。

%matplotlib inline

import io

from itertools import product

from pathlib import Path

import urllib

import warnings

import pandas as pd

import numpy as np

import scipy

import scipy.io as sio

from matplotlib import rcParams

from matplotlib import pyplot as plt

from matplotlib.image import imread

import seaborn as sns

A4 = [8.27, 11.69]

rcParams.update(

{

"axes.spines.top": False,

"axes.spines.right": False,

"axes.formatter.use_mathtext": True,

"axes.formatter.limits": [-3, 3],

"lines.linewidth": 1,

"lines.markersize": 5,

"lines.markeredgewidth": 0.5,

"lines.markeredgecolor": "white",

"legend.frameon": False,

"font.size": 11,

"text.usetex": False,

"font.family": ["Helvetica Neue", "IPAexGothic", "DejaVu Sans", "sans-serif"],

}

)

def download(url):

obj = io.BytesIO()

user_agent = "Mozilla/5.0 (Windows NT 6.1; Win64; x64)"

headers = {"User-Agent": user_agent}

req = urllib.request.Request(url, None, headers)

with urllib.request.urlopen(req) as response:

obj.write(response.read())

return obj

url_dog = "https://abittechnical.work/wp-content/uploads/2023/08/dog.jpg"

url_faces = "https://abittechnical.work/wp-content/uploads/2023/08/allFaces.mat"

dogpict_original = imread(download(url_dog), format="jpg")

faces_mat_contents = scipy.io.loadmat(download(url_faces))

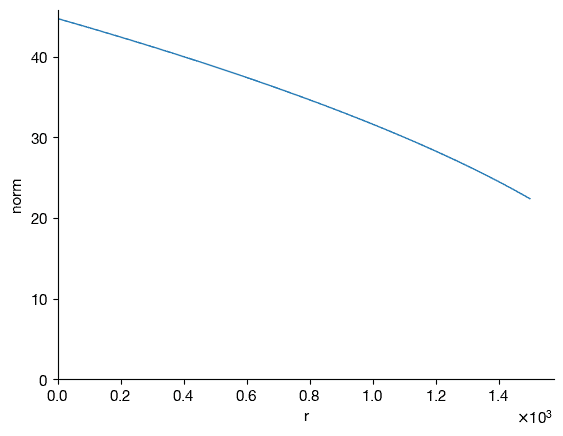

Exercise 1.1

Load the image dog.jpg and compute the full SVD. Choose a rank r < m and confirm that the matrix U ∗ U is the r × r identity matrix. Now confirm that UU ∗ is not the identity matrix. Compute the norm of the error between UU ∗ and the n × n identity matrix as the rank r varies from 1 to n and plot the error.

A = dogpict_original

X = np.mean(A, -1)

# Convert RGB to grayscale

n, m = X.shape

U, S, Vh = np.linalg.svd(X, full_matrices=False)

print(f"n, m = {n}, {m}")

# Is U∗U is an identity matrix?

r = 100

np.allclose(U[:, :r].T @ U[:, :r], np.identity(r), rtol=1e-05, atol=1e-08)

n, m = 2000, 1500

True

# Is UU∗ is an identity matrix?

r = 100

np.allclose(U[:, :r] @ (U[:, :r].T), np.identity(n), rtol=1e-05, atol=1e-08)

False

rs = range(1, m)

norms = []

for r in rs:

norms.append(np.linalg.norm(U[:, :r] @ U[:, :r].T - np.identity(n)))

/var/folders/jp/3z69nrvn7hn77ykj22gsj20h0000gn/T/ipykernel_75812/3951522986.py:4: RuntimeWarning: invalid value encountered in matmul norms.append(np.linalg.norm(U[:, :r] @ U[:, :r].T - np.identity(n)))

fig, ax = plt.subplots()

ax.plot(rs, norms)

ax.set_xlim(0, ax.get_xlim()[1])

ax.set_ylim(0, ax.get_ylim()[1])

ax.set_xlabel("r")

ax.set_ylabel("norm")

Text(0, 0.5, 'norm')

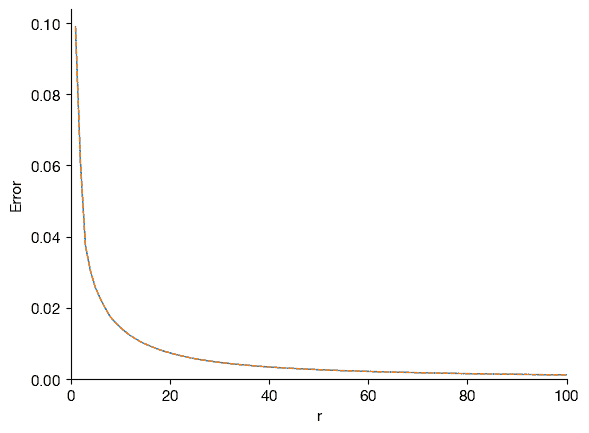

Exercise 1.2

Load the image dog.jpg and compute the economy SVD. Compute the relative reconstruction error of the truncated SVD in the Frobenius norm as a function of the rank r. Square this error to compute the fraction of missing variance as a function of r. You may also decide to plot 1 minus the error or missing variance to visualize the amount of norm or variance captured at a given rank r. Plot these quantities along with the cumulative sum of singular values as a function of r. Find the rank r where the reconstruction captures 99% of the total variance. Compare this with the rank r where the reconstruction captures 99% in the Frobenius norm and with the rank r that captures 99% of the cumulative sum of singular values.

A = dogpict_original

X = np.mean(A, -1)

# Convert RGB to grayscale

n, m = X.shape

U, S, Vh = np.linalg.svd(X, full_matrices=False)

ranks = np.hstack([np.arange(1, 101), np.arange(101, m + 1, 10)])

errs = []

for r in ranks:

XSVD = U[:, :r] @ np.diag(S[:r]) @ Vh[:r, :]

errs.append(np.linalg.norm(XSVD - X) / np.linalg.norm(X))

errs = np.array(errs)

fig, ax = plt.subplots()

ax.plot(

ranks,

errs**2,

"-",

range(1, len(S) + 1),

1 - np.cumsum(S**2) / np.sum(S**2),

"--",

)

ax.set_xlim(0, 100)

ax.set_ylim(0, ax.get_ylim()[1])

ax.set_xlabel("r")

ax.set_ylabel("Error")

Text(0, 0.5, 'Error')

# rank r that captures 99% of the total variance

np.arange(1, len(S) + 1)[np.cumsum(S**2) >= 0.99 * (S**2).sum()][0]

15

# rank r that captures 99% of the cumulative sum of singular values

ranks[errs < 0.01][0]

311

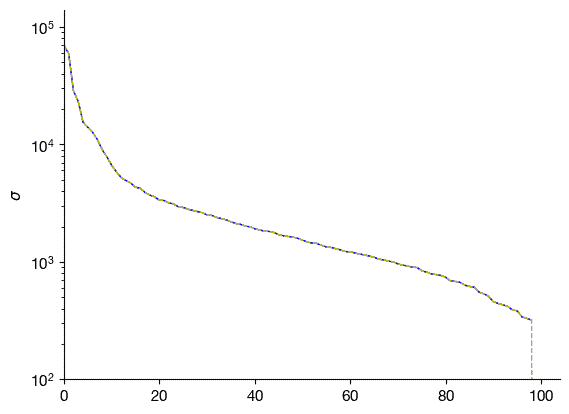

Exercise 1.3

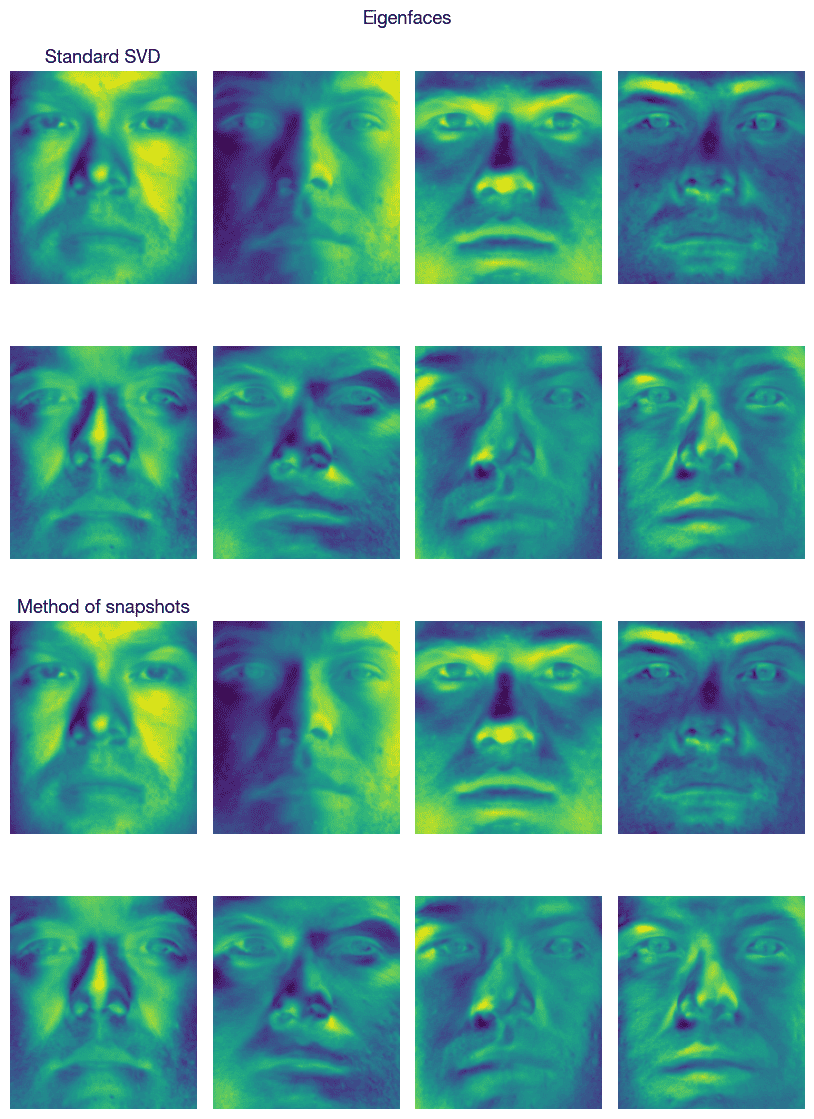

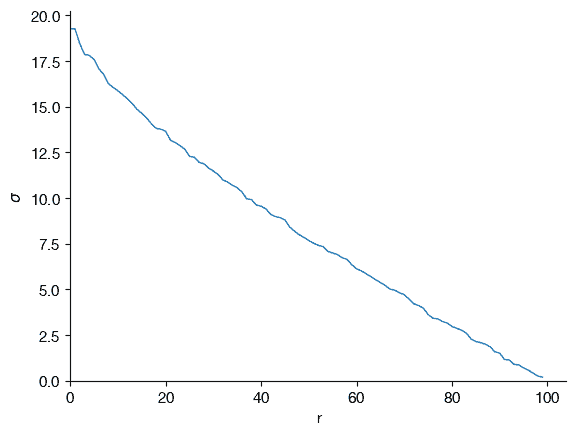

Load the Yale B image database and compute the economy SVD using a standard svd command. Now compute the SVD with the method of snapshots. Compare the singular value spectra on a log plot. Compare the first 10 left singular vectors using each method (remember to reshape them into the shape of a face). Now compare a few singular vectors farther down the spectrum. Explain your findings.

faces = faces_mat_contents["faces"]

m = faces_mat_contents["m"][0][0]

n = faces_mat_contents["n"][0][0]

n_faces_to_use = 100

faces_to_use = faces[:, :n_faces_to_use]

avgFace = np.mean(faces_to_use, axis=1)

X = faces_to_use - np.atleast_2d(avgFace).T

# Standard SVD

U, S, Vh = np.linalg.svd(X, full_matrices=False)

# The method of snapshots

Sss_2_, Vss_ = np.linalg.eig(X.T @ X)

ind = np.argsort(Sss_2_)[::-1]

Sss = np.sqrt(Sss_2_[ind])

Vss = Vss_[:, ind]

Uss_ = X @ Vss @ np.diag(1 / Sss)

Uss = Uss_ / np.linalg.norm(Uss_, axis=0)

/var/folders/jp/3z69nrvn7hn77ykj22gsj20h0000gn/T/ipykernel_75812/1740156833.py:7: RuntimeWarning: invalid value encountered in sqrt Sss = np.sqrt(Sss_2_[ind])

fig, ax = plt.subplots()

ax.semilogy(range(len(Sss)), Sss, "b-", range(len(S)), S, "y--")

ax.set_xlim(0, ax.get_xlim()[1])

ax.set_ylim(1e2, 2 * S.max())

ax.set_ylabel("$\sigma$")

Text(0, 0.5, '$\\sigma$')

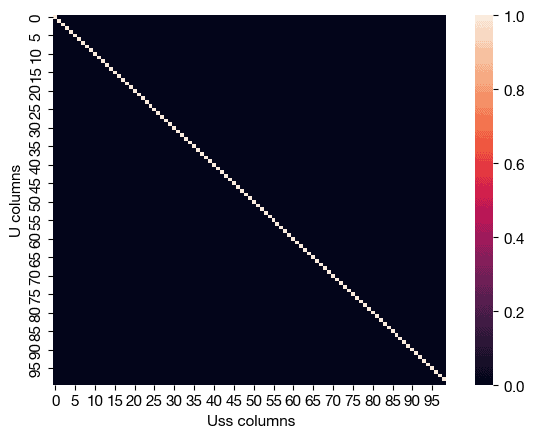

The inner product of unitary matrices Ums and Umss exhibits plus or minus ones along its diagonal, indicating the equality of their column vectors.

inner_product = U.T @ Uss

diagonal = np.diag(inner_product)

diagonal

array([-1., -1., -1., -1., -1., -1., -1., 1., 1., -1., -1., -1., -1.,

1., -1., -1., 1., -1., -1., -1., 1., -1., 1., -1., -1., -1.,

1., 1., -1., 1., -1., 1., 1., 1., 1., -1., -1., -1., -1.,

1., 1., 1., 1., -1., 1., 1., 1., 1., -1., -1., 1., -1.,

1., -1., 1., 1., -1., 1., 1., -1., 1., 1., -1., 1., -1.,

-1., -1., 1., 1., -1., 1., -1., -1., -1., 1., 1., -1., 1.,

1., 1., -1., 1., -1., 1., 1., 1., 1., -1., -1., 1., -1.,

-1., -1., 1., -1., -1., -1., -1., 1., nan])fig, ax = plt.subplots()

sns.heatmap(np.abs(inner_product), ax=ax)

ax.set_xlabel("Uss columns")

ax.set_ylabel("U columns")

Text(50.722222222222214, 0.5, 'U columns')

fig = plt.figure(figsize=A4)

for i, method in enumerate(["Standard SVD", "Method of snapshots"]):

for j in range(8):

ax = fig.add_subplot(4, 4, 8 * i + j + 1)

if method == "Standard SVD":

eigenface_vector = U[:, j]

else:

eigenface_vector = Uss[:, j] * diagonal[j]

ax.imshow(eigenface_vector.reshape(m, n).T)

ax.axis("off")

if j == 0:

ax.set_title(method)

fig.suptitle(f"Eigenfaces")

plt.tight_layout()

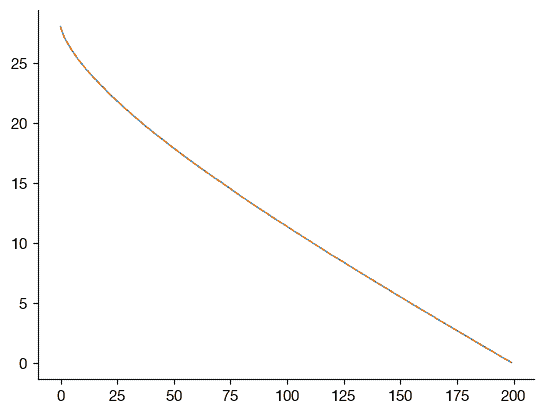

Exercise 1.4

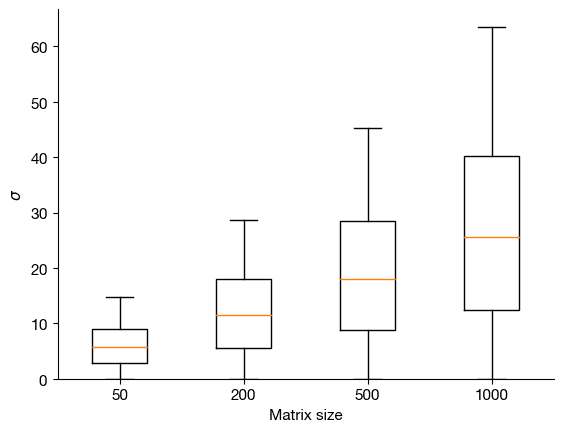

Generate a random100×100 matrix,i.e., a matrix whose entries are sampled from a normal distribution. Compute the SVD of this matrix and plot the singular values. Repeat this 100 times and plot the distribution of singular values in a box-and-whisker plot. Plot the mean and median singular values as a function of r. Now repeat this for different matrix sizes (e.g., 50 × 50, 200 × 200, 500 × 500, 1000 × 1000, etc.).

n = 100

X = np.random.randn(n, n)

U, S, Vh = np.linalg.svd(X, full_matrices=False)

fig, ax = plt.subplots()

ax.plot(S)

ax.set_xlim(0, ax.get_xlim()[1])

ax.set_ylim(0, ax.get_ylim()[1])

ax.set_xlabel("r")

ax.set_ylabel("$\sigma$")

Text(0, 0.5, '$\\sigma$')

Sdata = []

Ns = [50, 200, 500, 1000]

for n in Ns:

Ss = []

for j in range(100):

X = np.random.randn(n, n)

U, S, Vh = np.linalg.svd(X, full_matrices=False)

Ss.append(S)

Ss = np.array(Ss)

Sdata.append(Ss)

Ss = Sdata[1]

fig, ax = plt.subplots()

ax.plot(

range(Ss.shape[1]),

Ss.mean(axis=0),

"-",

range(Ss.shape[1]),

np.median(Ss, axis=0),

"--",

)

[<matplotlib.lines.Line2D at 0x15fa1f090>, <matplotlib.lines.Line2D at 0x15f950d50>]

fig, ax = plt.subplots()

ax.boxplot([np.ravel(Ss) for Ss in Sdata], labels=Ns)

ax.set_ylim(0, ax.get_ylim()[1])

ax.set_xlabel("Matrix size")

ax.set_ylabel("$\sigma$")

Text(0, 0.5, '$\\sigma$')

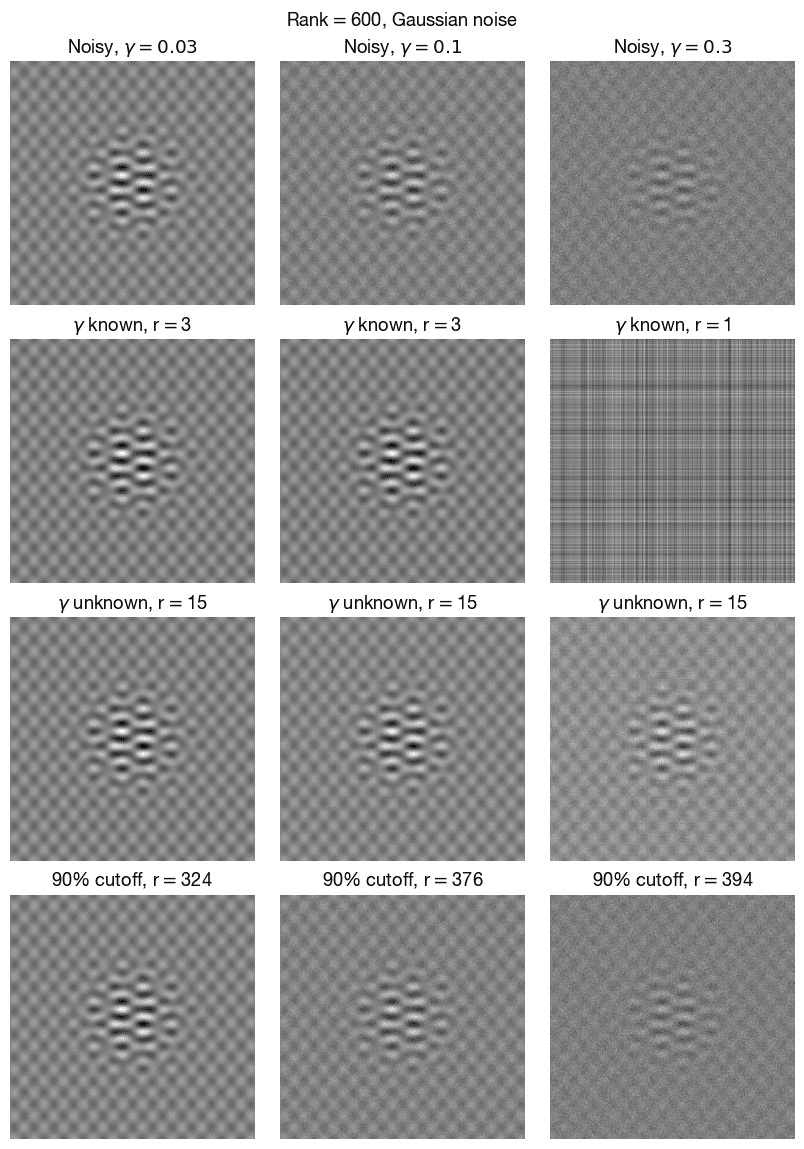

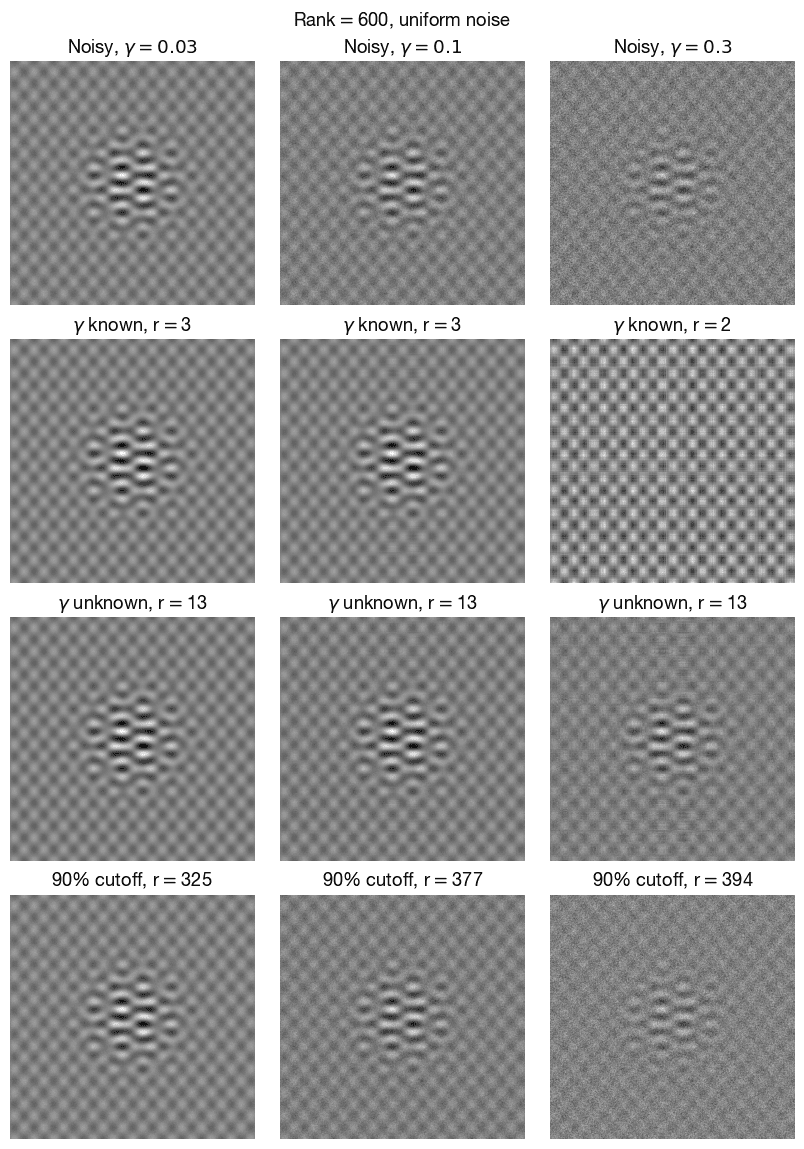

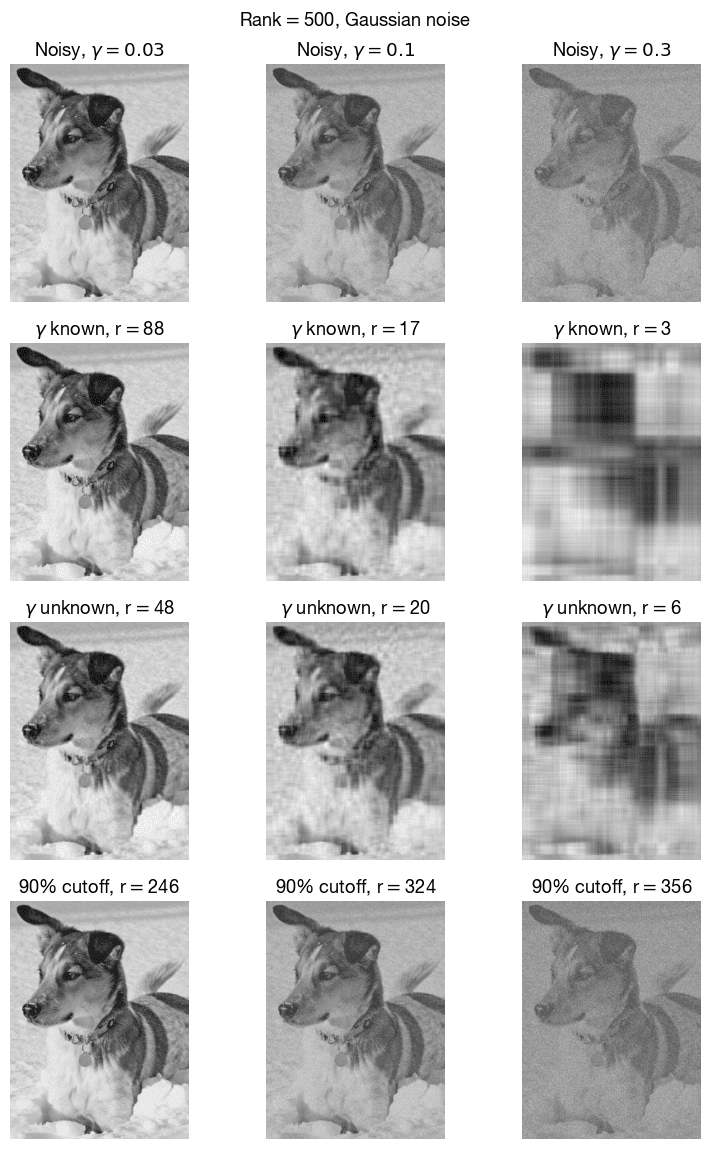

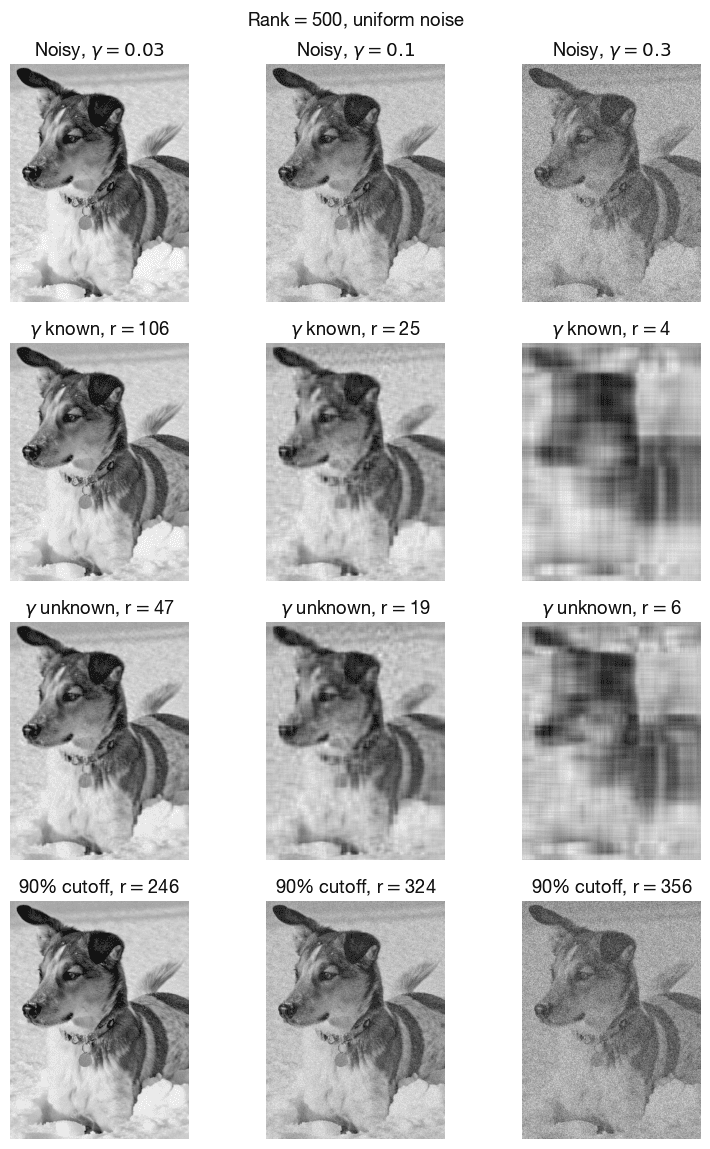

Exercise 1.5

Compare the singular value distributions for a 1000 × 1000 uniformly distributed random matrix and a Gaussian random matrix of the same size. Adapt the Gavish–Donoho algorithm to filter uniform noise based on this singular value distribution. Add uniform noise to a data set (either an image or the test low-rank signal) and apply this thresholding algorithm to filter the noise. Vary the magnitude of the noise and compare the results. Is the filtering good or bad?

from scipy.integrate import quad

from scipy.optimize import fsolve

def lambda_(beta):

return np.sqrt(

2 * (beta + 1)

+ 8 * beta / ((beta + 1) + np.sqrt(np.square(beta) + 14 * beta + 1))

)

def beta_integ(mu, beta):

f = lambda t, beta: (

(np.square(1 + np.sqrt(beta)) - t)

* np.sqrt((t - np.square(1 - np.sqrt(beta))))

/ 2

/ np.pi

/ t

)

with warnings.catch_warnings():

warnings.simplefilter(action="ignore", category=RuntimeWarning)

warnings.simplefilter(

action="ignore", category=scipy.integrate.IntegrationWarning

)

return quad(lambda mu: f(mu, beta), np.square(1 - beta), mu)

def mu(beta):

with warnings.catch_warnings():

warnings.simplefilter(action="ignore", category=RuntimeWarning)

return fsolve(lambda mu: beta_integ(mu, beta)[0] - 0.5, 1)

def omega(beta):

return lambda_(beta) / mu(beta)[0]

def find_tau(n, m, S, gamma=np.nan):

beta = min(n / m, m / n)

if np.isnan(gamma):

return omega(beta) * np.median(S)

elif n == m:

return 4 * np.sqrt(n / 3) * gamma

else:

return lambda_(beta) * np.sqrt(n) * gamma

def dogpict(int_reduction_ratio=3):

A = dogpict_original

Xtrue = np.mean(A[::int_reduction_ratio, ::int_reduction_ratio, :], axis=-1) / 255

return Xtrue

def checkpattern():

t = np.arange(-3, 3, 0.01)

Utrue = np.array([np.cos(17 * t) * np.exp(-(t**2)), np.sin(11 * t)]).T

Strue = np.array([[2, 0], [0, 0.5]])

Vtrue = np.array([np.sin(5 * t) * np.exp(-(t**2)), np.cos(13 * t)]).T

Xtrue_ = Utrue @ Strue @ Vtrue.T

Xtrue = (Xtrue_ - Xtrue_.min()) / (Xtrue_.max() - Xtrue_.min())

return Xtrue

def standardize(a):

return (a - a.mean()) / a.std()

def normalize(a):

return a / (a.max() - a.min())

def plot_Exercise1_5(

Xtrue, noise_distribution="Gaussian", gammas=[0.03, 0.1, 0.3], cutoff_threshold=0.9

):

n, m = Xtrue.shape

if noise_distribution == "Gaussian":

Xnoise = np.random.randn(n, m)

elif noise_distribution == "uniform":

Xnoise = standardize(np.random.rand(n, m))

fig, axes = plt.subplots(nrows=4, ncols=len(gammas), figsize=A4)

for i, gamma in enumerate(gammas):

X = normalize(Xtrue + gamma * Xnoise)

U, S, Vh = np.linalg.svd(X)

axes[0][i].imshow(X.reshape(n, m), cmap="gray")

axes[0][i].axis("off")

axes[0][i].set_title(f"Noisy, $\gamma={gamma}$")

for j, gamma in enumerate([gamma, np.nan], start=1):

tau = find_tau(n, m, S, gamma=gamma)

r = sum(S > tau)

XSVD = U[:, :r] @ np.diag(S[:r]) @ Vh[:r, :]

axes[j][i].imshow(XSVD.reshape(n, m), cmap="gray")

axes[j][i].axis("off")

axes[j][i].set_title(f'$\gamma$ {["known","unknown"][j-1]}, r$=${r}')

r = np.where(S.cumsum() > cutoff_threshold * S.sum())[0][0]

XSVD = U[:, :r] @ np.diag(S[:r]) @ Vh[:r, :]

axes[3][i].imshow(XSVD.reshape(n, m), cmap="gray")

axes[3][i].axis("off")

axes[3][i].set_title(f"{cutoff_threshold:.0%} cutoff, r$=${r}")

fig.suptitle(f"Rank$=${len(S)}, {noise_distribution} noise")

plt.tight_layout()

for Xtrue, noise_distribution in product(

[checkpattern(), dogpict()], ["Gaussian", "uniform"]

):

plot_Exercise1_5(Xtrue, noise_distribution)